I have two collections. Collection 1 contains 160,000 records, while Collection 2 contains 1.7 million records. Collection 1’s IDs are used as foreign keys in Collection 2. I want to match data from both collections, but when I add a lookup, the execution time exceeds one minute. Can anyone help me with how to handle this situation? Just to clarify, both collections are on my local machine, and I’ve indexed the keys used in $match, $lookup, and $sort operations.

Have you tried running an explain on the query to see what it’s actually doing and if it’s matching the indexes.

What do your documents look like and what is your query? What indexes have you created? What version of Mongo are you running?

Without more information there is not a lot to comment on, you can create an example of your issue on Mongo playground or something so people can give feedback:

Hi @John_Sewell ,

Thanks for you reply. Really I appreciate your help . Below are the required data :

My two collections :

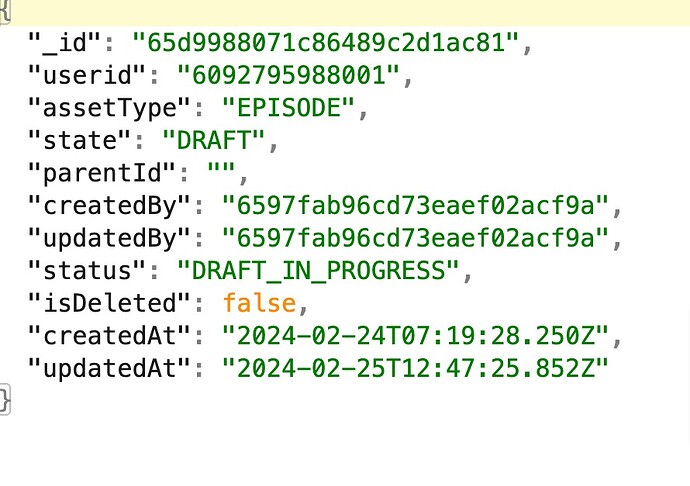

users_order collection :

{

“_id”: “65d9988071c86489c2d1ac81”,

“userid”: “6092795988001”,

“assetType”: “EPISODE”,

“state”: “DRAFT”,

“parentId”: “”,

“createdBy”: “6597fab96cd73eaef02acf9a”,

“updatedBy”: “6597fab96cd73eaef02acf9a”,

“status”: “DRAFT_IN_PROGRESS”,

“isDeleted”: false,

“createdAt”: “2024-02-24T07:19:28.250Z”,

“updatedAt”: “2024-02-25T12:47:25.852Z”

}

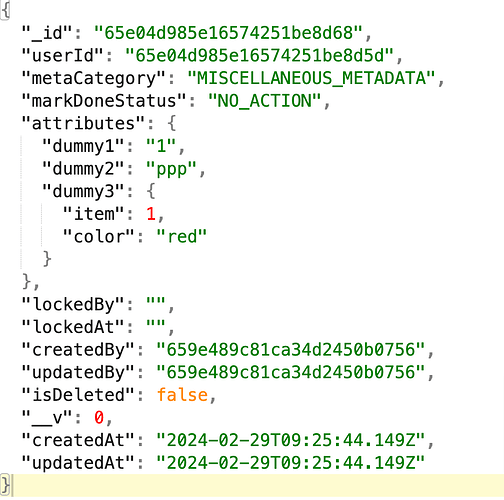

Order collection :

{

“_id”: “65e04d985e16574251be8d68”,

“userId”: “65e04d985e16574251be8d5d”,

“metaCategory”: “MISCELLANEOUS_METADATA”,

“markDoneStatus”: “NO_ACTION”,

“attributes”: {

“dummy1”:“1”,

“dummy2”:“ppp”,

“dummy3”:{

“item”:1

“color”:“red”

}

},

“lockedBy”: “”,

“lockedAt”: “”,

“createdBy”: “659e489c81ca34d2450b0756”,

“updatedBy”: “659e489c81ca34d2450b0756”,

“isDeleted”: false,

“__v”: 0,

“createdAt”: “2024-02-29T09:25:44.149Z”,

“updatedAt”: “2024-02-29T09:25:44.149Z”

}

I have already user master data. When user regsited it entered to master. But when created any order with some extradata I add in users_order collection and when order placed I have inputing it at order collection

At users_order collection I have 1.6 lakhs data and orders colelction I have 2millions of data.

This db structure is designed before. I can’t restructured in this moment

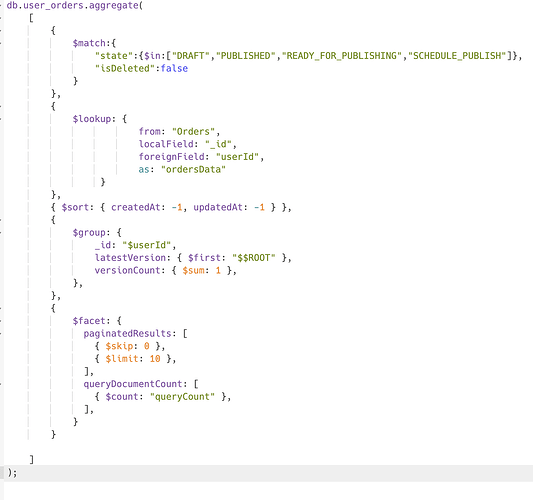

My query :

db.user_orders.aggregate(

[

{

$match:{

“state”:{$in:[“DRAFT”,“PUBLISHED”,“READY_FOR_PUBLISHING”,“SCHEDULE_PUBLISH”]},

“isDeleted”:false

}

},

{

$lookup: {

from: “Orders”,

localField: “_id”,

foreignField: “userId”,

as: “ordersData”

}

},

{ $sort: { createdAt: -1, updatedAt: -1 } },

{

$group: {

_id: “$userId”,

latestVersion: { $first: “$$ROOT” },

versionCount: { $sum: 1 },

},

},

{

$facet: {

paginatedResults: [

{ $skip: 0 },

{ $limit: 10 },

],

queryDocumentCount: [

{ $count: “queryCount” },

],

}

}

]

);

I have created index {state, isDeleted}

$userId

It’s execution time before group by is 2 secs but after group, it’s execution time 6sec. which is not working for me.

Hope I am able to explain your query.

Thank You

Team,

any update from any one?

I’m sure you’ll get some replies today, given that the last two days have been a weekend.

How many documents enter the group stage? You are grouping on $userid but have no index or sorting in your query so it will need to scan / sort the data so that it can perform the grouping of data.

Overall you’re trying to get list of users along with their most recent orders? Something else to think about is it you need all fields or can $project it down to the absolute needed when pushing through pipeline stages.

Hi Pradyumna,

As mentioned by @John_Sewell , the query performance will increase if you place an index on userId field. One more thing I would like to point out is the order of stages in your pipeline, as far as i can understand you are first performing a lookup on all user_orders with orders and then limiting the user_orders to only the latest for each user, making most of the performed lookups obsolete. It is recommended that you first perform the group operation and then the lookup, it will then only perform it for all the required user_orders hence increasing the query performance.

The resultant query would somewhat look like this -

[

{

$match: {

"state": {

$in: [“DRAFT”,“PUBLISHED”,“READY_FOR_PUBLISHING”,“SCHEDULE_PUBLISH”]

},

"isDeleted": false

}

},

{

$sort: {

"createdAt": -1,

"updatedAt": -1

}

},

{

$group: {

_id: "$userId",

"latestVersion": {

"$first": "$$ROOT"

},

"versionCount": {

$sum: 1

}

}

},

{

"$lookup": {

"from": "Orders",

"localField": "latestVersion._id",

"foreignField": "userId",

"as": "order_data"

}

},

{

$facet: {

"paginatedResults": [

{

$skip: 0

},

{

$limit: 10

}

],

"queryDocumentCount": [

{

$count: "queryCount"

}

]

}

}

]

Good point on the ordering of stages, if you’re filtering out data, no point running a lookup on it when you don’t need that data!

@Akshat_Gupta3

Thanks for your reply. I had also tried with first group by and then added look up , it was taking approx 3 secs then I have tried the shared query.

It is taking approx 5secs but I want the query within a sec. Can it be possible?

Have you applied index on userId field?

You have a filter and a sort, can you try combining into an index so that one index covers both requirements? That should make the initial data fetch very quick.

After adding this index it reduced now facing another issue. when I am using facet to return pagination data and total count , then again getting 5sec execution time.

{

$facet: {

paginatedResults: [

{ $skip: 0 },

{ $limit: 10 },

],

queryDocumentCount: [

{ $count: "queryCount" },

],

},

},

yes, i have applied userId

any update from anyone ?

Hi @Pradyumna_Mishra

Can you please share the query execution stats again with all the query optimisations discussed above so that we can then identify what is the exact stage that is taking time in execution.

This topic was automatically closed 5 days after the last reply. New replies are no longer allowed.