We are seeing occasional but significant delays when reading collections that contain only one or a few very small documents.

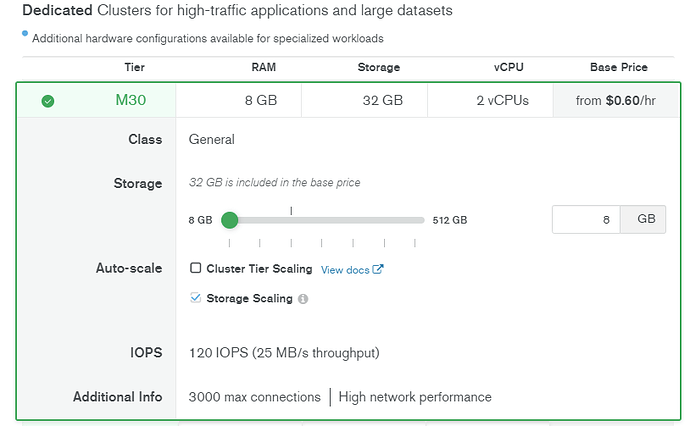

We are connecting from a .NET Core App Service in Azure Texas to an M30 Atlas cluster in the same region.

The most frequent stack trace ending is:

Server+ServerChannel+<ExecuteProtocolAsync>d__33`1.MoveNext (mongodb.driver.core)

CommandWireProtocol`1.ExecuteAsync (mongodb.driver.core)

CommandUsingCommandMessageWireProtocol`1.ExecuteAsync (mongodb.driver.core)

Framework/Library AsyncMethodBuilderCore.Start (system.private.corelib.il)

CommandUsingCommandMessageWireProtocol`1+<ExecuteAsync>d__19.MoveNext (mongodb.driver.core)

ExclusiveConnectionPool+AcquiredConnection.ReceiveMessageAsync (mongodb.driver.core)

BinaryConnection.ReceiveMessageAsync (mongodb.driver.core)

Framework/Library AsyncMethodBuilderCore.Start (system.private.corelib.il)

BinaryConnection+<ReceiveMessageAsync>d__57.MoveNext (mongodb.driver.core)

BinaryConnection.ReceiveBufferAsync (mongodb.driver.core)

Framework/Library AsyncMethodBuilderCore.Start (system.private.corelib.il)

BinaryConnection+<ReceiveBufferAsync>d__55.MoveNext (mongodb.driver.core)

BinaryConnection.ReceiveBufferAsync (mongodb.driver.core)

Framework/Library AsyncMethodBuilderCore.Start (system.private.corelib.il)

BinaryConnection+<ReceiveBufferAsync>d__54.MoveNext (mongodb.driver.core)

StreamExtensionMethods.ReadBytesAsync (mongodb.driver.core)

Framework/Library AsyncMethodBuilderCore.Start (system.private.corelib.il)

StreamExtensionMethods+<ReadBytesAsync>d__4.MoveNext (mongodb.driver.core)

StreamExtensionMethods.ReadAsync (mongodb.driver.core)

Framework/Library AsyncMethodBuilderCore.Start (system.private.corelib.il)

StreamExtensionMethods+<ReadAsync>d__1.MoveNext (mongodb.driver.core)

Framework/Library SslStream.ReadAsync (system.net.security.il)

- The Mongo client is used as a singleton (this was already the case).

- Resource usage looks fine on both the App Service and the cluster.

Any suggestion would be welcome.